I have a data subscription and have been analyzing exported data from a Telraam unit (xlsx files downloaded from the web site).

I noticed that if I filter the date range on the public Telraam web page to cover a single day, the total that I get for bikes is higher than the number I get by computing the sum of “BikeTotal” over the same day.

For example, the web page for this Saturday indicates 521 two-wheelers, whereas the sum of the “BikeTotal” column for the same day gives only 496.

In general I have been working with single day time spans, and get a similar result for any chosen day. I have also been focusing on bikes/two-wheelers so am not certain if this also happens for other vehicle types.

Does this correspond to a known feature of the platform? If it seems wrong, I’m happy to provide some example data and a minimal code example that replicates the issue.

Thanks in advance for any advice!

Hi Andy - I’m happy to try to help and debug this, but at first glance I can’t make this out as the data for this Saturday on your device shows only 277 two-wheelers so I’m not sure which data I am looking at.

Can you point me to the exact date you are reviewing and seeing a difference?

Thanks Rob!

The data in question were actually from the second Telraam unit installed on our street (segment 9000007489).

For segment 9000007290, Saturday’s count on the public Telraam portal was 277 as you say. However, the sum of the exported ‘BikeTotal’ column for that date appears to be 270 (similarly lower than the web portal value).

Hmm… maybe there is something wrong with your code?

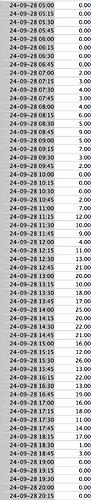

If I take a look at 28 September two-wheeler data in the xls export file, I get the following hourly totals:

| Hour | Two-wheelers |
| --- | --- |
| 2024-09-28 00:00 | 0 |
| 2024-09-28 01:00 | 0 |
| 2024-09-28 02:00 | 0 |
| 2024-09-28 03:00 | 0 |
| 2024-09-28 04:00 | 0 |
| 2024-09-28 05:00 | 0 |
| 2024-09-28 06:00 | 0 |
| 2024-09-28 07:00 | 12 |
| 2024-09-28 08:00 | 24 |
| 2024-09-28 09:00 | 17 |
| 2024-09-28 10:00 | 2 |
| 2024-09-28 11:00 | 38 |
| 2024-09-28 12:00 | 49 |
| 2024-09-28 13:00 | 65 |
| 2024-09-28 14:00 | 88 |
| 2024-09-28 15:00 | 67 |
| 2024-09-28 16:00 | 72 |
| 2024-09-28 17:00 | 59 |
| 2024-09-28 18:00 | 28 |
| 2024-09-28 19:00 | 0 |
| 2024-09-28 20:00 | 0 |
| 2024-09-28 21:00 | 0 |
| 2024-09-28 22:00 | 0 |
| 2024-09-28 23:00 | 0 |

The sum is 521 which is the same as on the website. Are you extracting the data somehow? Is your date / timezone correct maybe?

OK, thanks for cross-checking. It looks like the issue might be with the 15-minute count intervals that I exported (see attached). When I use the same code with 60-minute time bins (re-downloaded from the web page), I get the same result as on the web portal (521).

Perhaps I do have a bug on my end, but it appears that the counts provide a different sum depending on whether they are binned into 15 minute intervals or 60 minute intervals.

I don’t think it’s a time zone issue, as I seem to get the expected bunch of zeros before and after the daytime counts.

I see. OK, there is actually a difference between these two in some cases if this is the data you are comparing.

The 15-minute data is the report of the actual totals received from the S2 device each quarter hour. Unfortunately, the device has no ‘memory’ and needs to send this data each 15 minute time slot, and if, for any reason, it fails (usually a signal quality issue), then that data is not recorded.

We account for this in the reported hourly totals for the public data (mainly because our original V1 device had more variable uptime): to give a more complete picture of traffic, we scale or extrapolate the data across an hour based on the uptime percentage for that hour.

In the case of the S2, if we don’t have data for 1 quarter, we show a 75% uptime, and the totals for the other quarters are then scaled to compensate when we report an hourly figure. If we lose more than 1, then you will also see that the graph on the website shows “poor quality” and is faded.
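The scaling described above can be sketched as follows. This is a minimal illustration, not Telraam's actual implementation: it assumes the hourly figure is the sum of the received quarters divided by the uptime fraction, and the function name and the sample counts are hypothetical.

```python
from typing import Optional, Sequence


def scale_hourly_total(quarters: Sequence[Optional[int]]) -> Optional[float]:
    """Estimate an hourly total from four 15-minute counts.

    A quarter that was never received is passed as None.
    Assumption: estimate = (sum of received counts) / uptime,
    where uptime is the fraction of quarters that were received.
    """
    received = [q for q in quarters if q is not None]
    if not received:
        return None  # no data at all for this hour, nothing to scale
    uptime = len(received) / len(quarters)
    return sum(received) / uptime


# Hypothetical counts: the third quarter was lost, so uptime is 0.75.
print(scale_hourly_total([20, 16, None, 18]))  # 72.0
```

With all four quarters present the uptime is 1 and the function simply returns the plain sum.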

We report this in the API and the extracted data in the column ‘uptime’ if you want to account for this:

| Hour | uptime |
| --- | --- |
| 2024-09-28 12:00 | 0.999444444 |
| 2024-09-28 13:00 | 0.999444444 |
| 2024-09-28 14:00 | 0.998888889 |
| 2024-09-28 15:00 | 0.999722222 |
| 2024-09-28 16:00 | 0.749722222 |
| 2024-09-28 17:00 | 0.999722222 |
| 2024-09-28 18:00 | 0.749444444 |

In this case, we are missing data for a couple of periods in the 15 minute data (at 16:00 and 18:00) on the 28th so your 15-minute totals will not match the hourly reported totals for that hour.

As you will see, most hours and days show 99%+ uptime. If you notice that it is lower, you can compensate for it in your code if you need this resolution.
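As a rough cross-check, the hourly totals and uptime values quoted in this thread can be used to back out what the unscaled 15-minute data would add up to. The sketch below assumes the raw count is approximately the hourly total multiplied by the uptime (the inverse of the scaling); that relationship is an assumption, not a documented formula.

```python
# Hourly two-wheeler totals and uptime for 28 September, copied from this thread.
hourly_total = {
    "12:00": 49, "13:00": 65, "14:00": 88, "15:00": 67,
    "16:00": 72, "17:00": 59, "18:00": 28,
}
uptime = {
    "12:00": 0.999444444, "13:00": 0.999444444, "14:00": 0.998888889,
    "15:00": 0.999722222, "16:00": 0.749722222, "17:00": 0.999722222,
    "18:00": 0.749444444,
}

# Assumption: raw count is roughly hourly total x uptime, rounded to a whole vehicle.
raw_estimate = {h: round(hourly_total[h] * uptime[h]) for h in hourly_total}

# How much the scaled hourly totals exceed the estimated raw counts.
gap = sum(hourly_total.values()) - sum(raw_estimate.values())
print(raw_estimate["16:00"], raw_estimate["18:00"], gap)  # 54 21 25
```

A gap of 25 is the same as the difference between 521 and 496 reported earlier in the thread, which is consistent with the two partially missing hours explaining the mismatch.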

Thanks for the detailed explanation!

That makes sense and should not be an issue for our application.

We started off with the 15 minute data mainly to get a sense for the variability, and this has indeed helped understand the underlying process. In general, the hourly counts are more convenient and useful anyway.

Hi - I was going to put this as a new topic, but it might make more sense as a followup on this thread.

The earlier discussion related to hourly ‘Two Wheeler’ counts as displayed on the public segment web page. Is an implicit uptime correction also applied for hourly ‘BicycleTotal_10Modes_’ counts in the exported spreadsheet files?

Also, would it be correct to assume that the meaningful values for hourly uptime are effectively limited to zero, one quarter, a half, three quarters, and one? The many decimal places of precision could imply otherwise, but it seems like this might just be an artifact of the export. If I understand correctly, uptime has no relevance in the context of a 15-minute time bin.

Edit: this should be easy to check by comparing 15-minute counts with hourly counts in two separate exports, so no rush. Just want to make sure I’m understanding this correctly.
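One way to run that check is sketched below, using only the standard library. The helper names, the row layout (timestamp string plus count), and the sample rows are hypothetical and would need adapting to the real export columns; the uptime values passed to `nearest_quarter` are the ones quoted earlier in this thread.

```python
from collections import defaultdict


def nearest_quarter(uptime: float) -> float:
    """Snap a reported uptime to the nearest multiple of 0.25."""
    return round(uptime * 4) / 4


def implied_uptime(quarter_rows, hourly_totals):
    """Ratio of summed 15-minute counts to the exported hourly total.

    quarter_rows: iterable of ("YYYY-MM-DD HH:MM", count) pairs.
    hourly_totals: dict mapping "YYYY-MM-DD HH:00" to the hourly count.
    Hours with a zero hourly total are skipped to avoid dividing by zero.
    """
    sums = defaultdict(int)
    for timestamp, count in quarter_rows:
        sums[timestamp[:13] + ":00"] += count  # truncate to the hour
    return {h: sums[h] / t for h, t in hourly_totals.items() if t}


print([nearest_quarter(u) for u in (0.999444444, 0.749722222, 0.749444444)])
# [1.0, 0.75, 0.75]

# Hypothetical 15-minute rows with the 16:30 slot missing.
rows = [("2024-09-28 16:00", 20), ("2024-09-28 16:15", 16), ("2024-09-28 16:45", 18)]
hourly = {"2024-09-28 16:00": 72}
print(implied_uptime(rows, hourly))  # {'2024-09-28 16:00': 0.75}
```

If an uptime correction is applied to a given hourly column, the implied ratio should land close to the reported uptime for hours with a missing quarter; if it is not, the ratio should stay at 1.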

Thanks again!